Introduction

What Big Data problem are we trying to solve?

- Class 1: Need only part of the data, so extract it from the whole and then operate on the extracted subset; e.g., extracting a few dozen genes of interest, or finding all intervals that overlap a chromosomal region.

- Class 2: Need to operate on all the data, but can operate on parts independently; e.g., computing over 1 Mb bins across the genome, or modeling independent studies separately.

- Class 3: Need to operate on all the data, and must work on all of it at once (sometimes for performance reasons); e.g., distance matrix calculations, pairwise correlations.
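
A minimal Python sketch of the three classes, using a few made-up intervals and vectors. All data and function names here are hypothetical, and plain tuples stand in for Bioconductor classes such as GRanges.

```python
from itertools import combinations
from math import sqrt

# Hypothetical intervals: (chromosome, start, end, score), 1-based and closed.
intervals = [
    ("chr1", 100, 200, 1.0),
    ("chr1", 150, 400, 2.0),
    ("chr1", 1_200_000, 1_200_500, 3.0),
    ("chr2", 500, 900, 4.0),
]


def class1_overlaps(data, chrom, start, end):
    """Class 1: extract only the records overlapping a region."""
    return [iv for iv in data if iv[0] == chrom and iv[1] <= end and iv[2] >= start]


def class2_bin_sums(data, bin_size=1_000_000):
    """Class 2: summarize each (chrom, bin) independently; bins could be
    processed in parallel. Each interval is assigned to the bin holding
    its start position (a simplification)."""
    sums = {}
    for chrom, start, _end, score in data:
        key = (chrom, (start - 1) // bin_size)
        sums[key] = sums.get(key, 0.0) + score
    return sums


def class3_distance_matrix(vectors):
    """Class 3: every pair of samples is compared, so all data are needed at once."""
    n = len(vectors)
    d = [[0.0] * n for _ in range(n)]
    for i, j in combinations(range(n), 2):
        dist = sqrt(sum((a - b) ** 2 for a, b in zip(vectors[i], vectors[j])))
        d[i][j] = d[j][i] = dist
    return d


print(class1_overlaps(intervals, "chr1", 180, 300))  # first two chr1 intervals
print(class2_bin_sums(intervals))
print(class3_distance_matrix([[0, 0], [3, 4], [6, 8]]))
```

Only the Class 2 loop splits naturally: each `(chrom, bin)` key could be computed on a different worker, whereas Class 3 needs every vector in reach of every other.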

Where do data live?

- Data warehouse

- Local disk

- Cloud storage

- R objects

- HTTP download

- Web API

How does the “user” interact with a data service?

Data availability can be thought of as a service. The service might simply be the file system, but it could be more complex.

- Local file system (VariantAnnotation, rtracklayer)

- Local embedded server/client (rhdf5, RSQLite)

- User-managed freestanding server/client (dbplyr, RPostgreSQL, rmongodb)

- Third-party managed server/client (bigrquery)

- Bioc-managed server/client (ExperimentHub)

How “rich” are data services with respect to analytical capabilities?

Data services vary in the complexity of the operations they enable. A file system, for example, has no “smarts”: it delivers bits and bytes as requested. A more complex system might include indexing capabilities (e.g., indexed VCF files, HDF5). More complexity again comes with systems like MongoDB and PostgreSQL. At the level of BigQuery, parallelism and rudimentary machine learning are possible. At the extreme are projects like Apache Spark that can serve as distributed analysis engines over arbitrarily large datasets.
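
As a sketch of what an indexing capability buys, the example below uses Python's standard-library `sqlite3` module as a local embedded server/client (the role RSQLite plays in R). The `variants` table and its rows are hypothetical; the point is that after `CREATE INDEX`, SQLite's query plan switches from scanning every row to searching the index.

```python
import sqlite3

# In-memory SQLite database standing in for a local embedded server/client.
# The table name, columns and rows are hypothetical.
con = sqlite3.connect(":memory:")
con.execute("CREATE TABLE variants (chrom TEXT, pos INTEGER, ref TEXT, alt TEXT)")
con.executemany(
    "INSERT INTO variants VALUES (?, ?, ?, ?)",
    [("chr1", p, "A", "G") for p in range(1, 10_001)]
    + [("chr2", p, "C", "T") for p in range(1, 10_001)],
)

query = "SELECT COUNT(*) FROM variants WHERE chrom = ? AND pos BETWEEN ? AND ?"
args = ("chr1", 500, 600)


def plan(sql, params):
    """Return the 'detail' text (last column) of each EXPLAIN QUERY PLAN row."""
    return [row[-1] for row in con.execute("EXPLAIN QUERY PLAN " + sql, params)]


before = plan(query, args)  # no index yet: SQLite scans the whole table
con.execute("CREATE INDEX idx_chrom_pos ON variants (chrom, pos)")
after = plan(query, args)   # now answered by searching idx_chrom_pos

(count,) = con.execute(query, args).fetchone()
print(before, after, count, sep="\n")
```

The query text is unchanged; only the service's capabilities differ, which is the sense in which an indexed service is “richer” than a plain file system.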

Data services cater to different forms of data.

- Arbitrary data types: file systems and object storage

- Record-based databases: NoSQL (MongoDB) and hybrid databases (PostgreSQL, BigQuery)

- Columnar databases: BigQuery, Cassandra; good for distributing data across “nodes”

- Arrays: TileDB, HDF5
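
Chunked HDF5 datasets and TileDB arrays (where chunks are called tiles) are stored in fixed-size blocks, so a read only has to fetch the blocks that overlap the requested slice. The hypothetical helper below, written in plain Python without any HDF5 or TileDB library, computes which chunks a rectangular slice touches; the matrix and chunk sizes are illustrative assumptions.

```python
from itertools import product


def chunks_touched(shape, chunk_shape, start, stop):
    """Return grid coordinates of every chunk overlapping the half-open
    slice [start, stop) of an N-dimensional chunked array."""
    ranges = []
    for dim, c, lo, hi in zip(shape, chunk_shape, start, stop):
        if not 0 <= lo < hi <= dim:
            raise ValueError("slice out of bounds or empty")
        # First and last chunk index along this dimension.
        ranges.append(range(lo // c, (hi - 1) // c + 1))
    return list(product(*ranges))


# Hypothetical 20,000 genes x 100,000 cells matrix in 1,000 x 1,000 chunks
# (20 x 100 = 2,000 chunks in total).
shape, chunk_shape = (20_000, 100_000), (1_000, 1_000)

# 50 genes across the first 2,500 cells touch only 3 of the 2,000 chunks.
print(chunks_touched(shape, chunk_shape, (0, 0), (50, 2_500)))
# [(0, 0), (0, 1), (0, 2)]
```

Chunk shape is therefore a design choice tied to the expected access pattern: chunks that are tall and narrow favor per-cell reads, wide and short ones favor per-gene reads.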

Bioconductor Packages

rhdf5

- dependsOnMe: GenoGAM, GSCA, HDF5Array, HiCBricks, LoomExperiment

- importsMe: BgeeCall, biomformat, bnbc, bsseq, CiteFuse, cmapR, CoGAPS, CopyNumberPlots, cTRAP, diffHic, DropletUtils, EventPointer, FRASER, GenomicScores, gep2pep, h5vc, HiCcompare, IONiseR, MOFA, phantasus, PureCN, ribor, scone, signatureSearch, slinky

- suggestsMe: edgeR, slalom, SummarizedExperiment, tximport

DelayedArray

- dependsOnMe: DelayedDataFrame, DelayedMatrixStats, GDSArray, HDF5Array, rhdf5client, singleCellTK, SummarizedExperiment, VCFArray

- importsMe: batchelor, beachmat, bigPint, BiocSingular, bsseq, CAGEr, celaref, celda, ChIPpeakAnno, clusterExperiment, DEScan2, DSS, ELMER, FRASER, GenoGAM, GenomicScores, glmGamPoi, hipathia, LoomExperiment, mbkmeans, methrix, methylSig, minfi, netSmooth, PCAtools, RTCGAToolbox, scater, scDblFinder, scMerge, scmeth, scran, signatureSearch, SingleR, VariantExperiment, weitrix

- suggestsMe: BiocGenerics, gwascat, iSEE, MAST, S4Vectors, SQLDataFrame

restfulSE

- dependsOnMe: tenXplore

- importsMe: NA

- suggestsMe: BiocOncoTK, BiocSklearn

Big data frameworks and toolkits

Loom

Loom is an efficient file format based on HDF5 for very large omics datasets, consisting of a main matrix, optional additional layers, a variable number of row and column annotations, and sparse graph objects.
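
To make the Loom layout concrete, the sketch below mocks a Loom file as a nested Python dict whose keys mirror the HDF5 paths (`matrix`, `layers`, `row_attrs`, `col_attrs`, `row_graphs`, `col_graphs`) and checks the shape rules that tie them together. It does not use `loompy` or `h5py`. The gene-by-cell orientation and the `a`/`b`/`w` coordinate form for graphs are assumed to follow the loompy file-format conventions; the dataset itself is hypothetical.

```python
def validate_loom_like(f):
    """Check the shape rules of a Loom-like layout held in a plain dict.

    Keys mirror the HDF5 paths of a Loom file. Assumed conventions:
    rows are genes, columns are cells, and each graph is stored in
    coordinate form as parallel lists a (from), b (to), w (weight).
    """
    matrix = f["matrix"]
    n_rows, n_cols = len(matrix), len(matrix[0])

    # Every extra layer must have the same shape as the main matrix.
    for name, layer in f.get("layers", {}).items():
        if (len(layer), len(layer[0])) != (n_rows, n_cols):
            raise ValueError(f"layer {name!r} does not match the main matrix")

    # Row/column annotations hold one value per row/column.
    for group, n in (("row_attrs", n_rows), ("col_attrs", n_cols)):
        for name, values in f.get(group, {}).items():
            if len(values) != n:
                raise ValueError(f"{group}/{name} has length {len(values)}, expected {n}")

    # Sparse graphs connect rows to rows, or columns to columns.
    for group, n in (("row_graphs", n_rows), ("col_graphs", n_cols)):
        for name, g in f.get(group, {}).items():
            if not len(g["a"]) == len(g["b"]) == len(g["w"]):
                raise ValueError(f"{group}/{name}: a, b, w differ in length")
            if any(not 0 <= i < n for i in g["a"] + g["b"]):
                raise ValueError(f"{group}/{name}: node index out of range")
    return n_rows, n_cols


# A tiny hypothetical 3-gene x 4-cell dataset.
loom_like = {
    "matrix": [[0, 1, 0, 3], [2, 0, 0, 1], [0, 0, 5, 0]],
    "layers": {"spliced": [[0, 1, 0, 2], [1, 0, 0, 1], [0, 0, 4, 0]]},
    "row_attrs": {"Gene": ["g1", "g2", "g3"]},
    "col_attrs": {"CellID": ["c1", "c2", "c3", "c4"]},
    "col_graphs": {"knn": {"a": [0, 1, 2], "b": [1, 0, 3], "w": [1.0, 1.0, 0.5]}},
}
print(validate_loom_like(loom_like))  # (3, 4)
```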

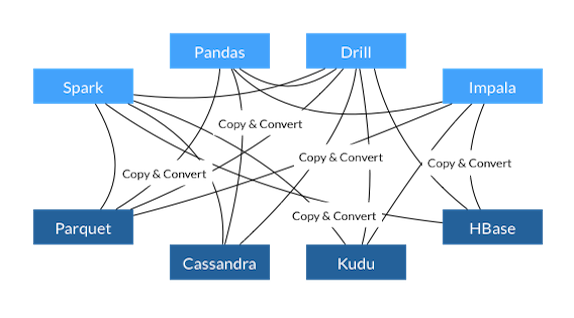

Apache Arrow

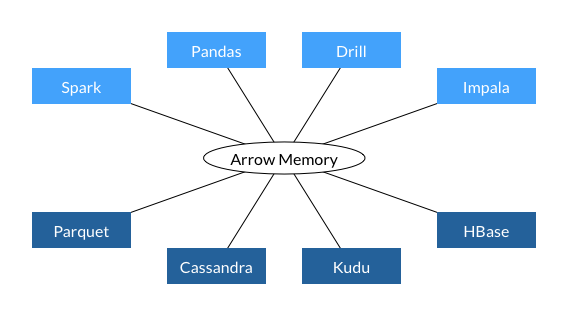

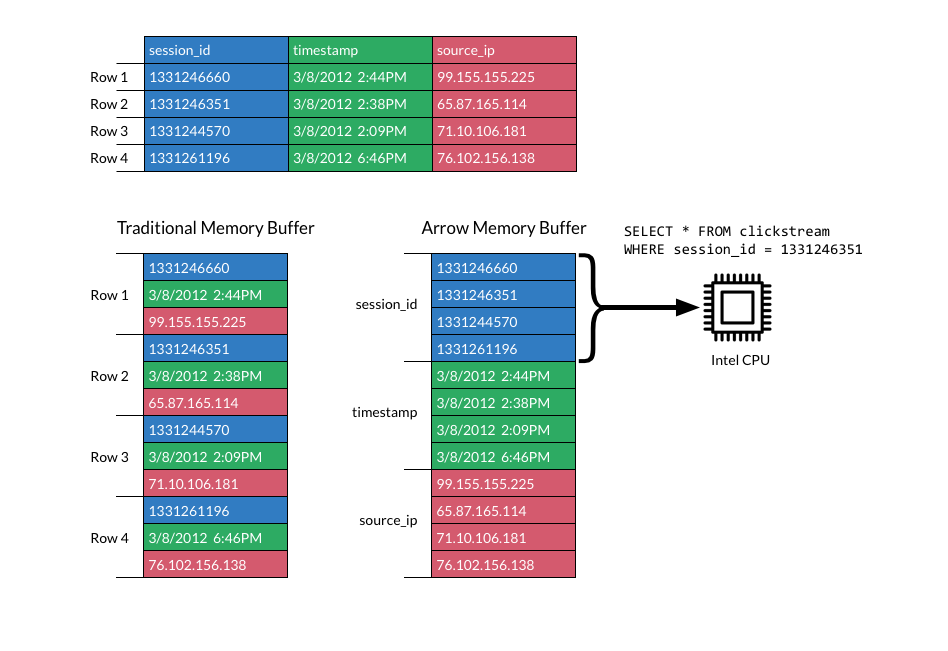

Apache Arrow is a cross-language development platform for in-memory data. It specifies a standardized language-independent columnar memory format for flat and hierarchical data, organized for efficient analytic operations on modern hardware. It also provides computational libraries and zero-copy streaming messaging and interprocess communication. Languages currently supported include C, C++, C#, Go, Java, JavaScript, MATLAB, Python, R, Ruby, and Rust.

Performance Advantage of Columnar In-Memory

Columnar memory layout allows applications to avoid unnecessary I/O and to accelerate analytical processing on modern CPUs and GPUs.
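
A minimal illustration of the columnar argument, using Python's standard-library `array` module rather than Arrow itself: the same hypothetical records are held row by row and column by column, and averaging one field needs only one contiguous column buffer. The byte counts assume the records are packed row by row with 8-byte fields.

```python
from array import array

# Hypothetical table: 1,000 records with 4 numeric fields.
n_rows = 1_000
fields = ("start", "end", "score", "depth")

# Row-oriented: each record's fields are stored together.
rows = [(i, i + 10, float(i % 7), i % 30) for i in range(n_rows)]

# Column-oriented: each field is one contiguous, typed buffer
# ("q" = 8-byte signed integer, "d" = 8-byte float).
columns = {
    "start": array("q", (r[0] for r in rows)),
    "end": array("q", (r[1] for r in rows)),
    "score": array("d", (r[2] for r in rows)),
    "depth": array("q", (r[3] for r in rows)),
}

# Same answer either way; the row layout walks every record,
# the columnar layout reads a single buffer.
row_mean = sum(r[2] for r in rows) / n_rows
col_mean = sum(columns["score"]) / n_rows
assert row_mean == col_mean

# Bytes streamed to compute the mean if the records were packed row by row
# (all 4 fields per record) versus read from the "score" column alone.
bytes_row_layout = n_rows * sum(columns[f].itemsize for f in fields)
bytes_col_layout = n_rows * columns["score"].itemsize
print(bytes_row_layout, bytes_col_layout)  # 32000 8000
```

The fourfold difference here is simply the ratio of total fields to fields used; wide tables queried on a few columns benefit most.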

TileDB

From the TileDB website:

- TileDB natively supports data versioning, built into its format and storage engine. Other formats do not support data updates or time travel; you need to build your own logic at the application layer or use extra software that acts like a database on top of your files.

- TileDB implements a variety of optimizations around parallel I/O on cloud object stores and multi-threaded computations (such as sorting, compression, etc.).

- TileDB is also “columnar”, but it offers more efficient multi-column (i.e., multi-dimensional) search. Think data.frame.

- With TileDB, you inherit a growing set of APIs (C, C++, Python, R, Java, Go), backend support (S3, GCS, Azure, HDFS), and integrations (e.g., Spark, MariaDB, PrestoDB, Dask), all developed and maintained by the TileDB team.
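
One common way to get the versioning and time travel described above is to make every write an immutable, timestamped fragment and to answer a read “as of” time t by replaying only the fragments written up to t. The Python class below is a toy model of that idea under those assumptions; it is not the TileDB API, and `VersionedStore` and its logical clock are hypothetical.

```python
import itertools


class VersionedStore:
    """Toy model of fragment-based versioning (not the TileDB API).

    Each write becomes an immutable fragment stamped with a logical
    timestamp; a read "as of" time t replays only fragments with ts <= t.
    """

    def __init__(self):
        self._fragments = []              # (timestamp, {cell: value}), in write order
        self._clock = itertools.count(1)  # hypothetical logical clock

    def write(self, cells):
        ts = next(self._clock)
        self._fragments.append((ts, dict(cells)))  # never modified afterwards
        return ts

    def read(self, as_of=None):
        state = {}
        for ts, cells in self._fragments:
            if as_of is None or ts <= as_of:
                state.update(cells)  # later fragments overwrite earlier cells
        return state


store = VersionedStore()
t1 = store.write({(0, 0): 1.0, (0, 1): 2.0})
t2 = store.write({(0, 1): 5.0})  # update a single cell

print(store.read(as_of=t1))  # {(0, 0): 1.0, (0, 1): 2.0}
print(store.read())          # {(0, 0): 1.0, (0, 1): 5.0}
```

Because old fragments are never rewritten, earlier versions stay readable for free; the cost is that reads must merge fragments, which real systems offset with periodic consolidation.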

TileDB R package

Others

BigQuery

Elasticsearch

Apache Drill

Combinations

Building these will usually require a custom API that is developed and maintained centrally.

Elasticsearch + AnnotationHub

- item-level indexing of genomic coordinates and attributes

- Return, for example, objects or records
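
The item-level idea can be sketched without Elasticsearch: index each record by (chromosome, fixed-width bin) and by attribute terms, intersect the candidate sets, then confirm exact overlap. Everything below (`ItemIndex`, the 100 kb bin width, the record IDs and attributes) is hypothetical and only illustrates the kind of query such a service would answer.

```python
from collections import defaultdict

BIN = 100_000  # hypothetical bin width in base pairs


class ItemIndex:
    """Toy item-level index over genomic records (not Elasticsearch)."""

    def __init__(self):
        self.records = {}                # rec_id -> (chrom, start, end, attrs)
        self.by_bin = defaultdict(set)   # (chrom, bin) -> rec_ids
        self.by_term = defaultdict(set)  # (attribute, value) -> rec_ids

    def add(self, rec_id, chrom, start, end, **attrs):
        self.records[rec_id] = (chrom, start, end, attrs)
        for b in range(start // BIN, end // BIN + 1):
            self.by_bin[(chrom, b)].add(rec_id)
        for key, value in attrs.items():
            self.by_term[(key, value)].add(rec_id)

    def search(self, chrom, start, end, **attrs):
        # Candidates share at least one bin with the query region.
        hits = set()
        for b in range(start // BIN, end // BIN + 1):
            hits |= self.by_bin.get((chrom, b), set())
        # Keep only candidates carrying every requested attribute value.
        for key, value in attrs.items():
            hits &= self.by_term.get((key, value), set())
        # Confirm exact overlap (sharing a bin does not imply overlap).
        return sorted(
            r for r in hits
            if self.records[r][1] <= end and self.records[r][2] >= start
        )


idx = ItemIndex()
idx.add("rec1", "chr1", 10_000, 20_000, genome="hg38", kind="peaks")
idx.add("rec2", "chr1", 150_000, 160_000, genome="hg38", kind="peaks")
idx.add("rec3", "chr1", 15_000, 18_000, genome="hg19", kind="peaks")

print(idx.search("chr1", 0, 50_000, genome="hg38"))  # ['rec1']
```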

Elasticsearch + ExperimentHub

- Deep study- and sample-level indexing, such as number of samples, sample attributes (ontology?), organisms, and technologies

- Return objects
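
Study-level indexing of this kind is essentially faceted search. In this hypothetical sketch (invented study records and field names, plain Python rather than an Elasticsearch aggregation), a query filters on attribute values and a minimum sample count, and returns the matching study IDs alongside per-facet counts.

```python
from collections import Counter

# Hypothetical study-level records, as a search index might hold them.
studies = [
    {"id": "study1", "organism": "Homo sapiens", "technology": "scRNA-seq", "n_samples": 120},
    {"id": "study2", "organism": "Homo sapiens", "technology": "bulk RNA-seq", "n_samples": 40},
    {"id": "study3", "organism": "Mus musculus", "technology": "scRNA-seq", "n_samples": 300},
    {"id": "study4", "organism": "Homo sapiens", "technology": "scRNA-seq", "n_samples": 15},
]


def faceted_search(records, min_samples=0, **filters):
    """Filter on exact attribute values and a minimum sample count; return
    matching IDs plus per-facet counts over the matches."""
    hits = [
        r for r in records
        if r["n_samples"] >= min_samples
        and all(r.get(k) == v for k, v in filters.items())
    ]
    facets = {
        field: dict(Counter(r[field] for r in hits))
        for field in ("organism", "technology")
    }
    return [r["id"] for r in hits], facets


ids, facets = faceted_search(studies, min_samples=20, organism="Homo sapiens")
print(ids)                   # ['study1', 'study2']
print(facets["technology"])  # {'scRNA-seq': 1, 'bulk RNA-seq': 1}
```

The returned IDs are what a hub client would then use to fetch the corresponding objects.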

TileDB + ExperimentHub (via DelayedArray and SE)

av_* and Elasticsearch/indexing

Questions

- What are the use cases?

- How do we measure success?

- For client and centrally managed server models, do we have the expertise to implement them?

- Should we get into the “functionality-as-a-service” game?

- Are there simpler approaches to follow?

- Bioc XSEDE allocation

- Document AnVIL Big Memory machines

- How can we expose new and existing functionality?

- What role does education (vs hands-on-keyboard) play in advancing the cause?

Notes

- EDAM Ontology: EDAM is a simple ontology of well-established, familiar concepts that are prevalent within bioinformatics, including types of data and data identifiers, data formats, operations and topics. EDAM provides a set of terms with synonyms and definitions, organised into an intuitive hierarchy for convenient use.